Header image: KF in Midjourney

Zuboff, S. (2022). Surveillance Capitalism or Democracy? The Death Match of Institutional Orders and the Politics of Knowledge in Our Information Civilization. Organization Theory, 3(3), 263178772211292. https://doi.org/10.1177/26317877221129290

- “Surveillance capitalism is what happened when US democracy stood down.”

- Zuboff decries “The abdication of the world’s information spaces to surveillance capitalism”

- “surveillance capitalist giants–Google, Apple, Facebook, Amazon, Microsoft, and their ecosystems now constitute a sweeping political-economic institutional order that exerts oligopolistic control over most digital information and communication spaces, systems, and processes.”

- “The commodification of human behavior operationalized in the secret massive-scale extraction of human-generated data is the foundation of surveillance capitalism’s two-decade arc of institutional development.”

- “Each stage creates the conditions and constructs the scaffolding for the next, and each builds on what went before”

- “Surveillance capitalism’s development is understood in the context of a larger contest with the democratic order––the only competing institutional order that poses an existential threat.”

- “While the liberal democracies have begun to engage with the challenges of regulating today’s privately owned information spaces, I argue that regulation of institutionalized processes that are innately catastrophic for democratic societies cannot produce desired outcomes.”

- “[need for] the abolition and reinvention of the early-stage economic operations that operationalize the commodification of the human, the source from which such harms originate.”

- “Without new public institutions, charters of rights, and legal frameworks purpose-built for a democratic digital century, citizens march naked, easy prey for all who steal and hunt with human data.”

- “In an information civilization individual and collective existence is rendered as and mediated by information. But what may be known? Who knows? Who decides who knows? Who decides who decides who knows? These are the four questions that describe the politics of knowledge in the digital age.”

- “Right now, however, it is the surveillance capitalist giants–Google/Alphabet, Facebook/Meta, Apple, Microsoft, Amazon–that control the answers to each of these questions, though they were never elected to govern.”

KF: e.g. the “Don’t take all the cookies” sign (Obedience to the Unenforceable)

- “an economic construct so novel and improbable, as to have escaped critical analysis for many years: the commodification of human behavior.”

- “As an economic power, surveillance capitalism exerts oligopolistic force over virtually all digital information and communication spaces”

- “When the economic operations that drive revenue are founded on the commodification of the human, the classic economic playing field is scrambled”

- “Surveillance capitalism is the young challenger, its pockets full of magic. Born at the turn of a digital century that it helped to create, its rapid growth is an American story that embodies many novel means of institutional reproduction.”

- “Chief among these has been its ability to keep law at bay. The absence of public law to obstruct its development is the keystone of its existence and essential to its continued success.”

- “Democracy is the old, slow and messy incumbent, but those very qualities bring advantages that are difficult to rival.”

- “cause-and-effect relationships between earlier novel economic operations and later dystopian harms to democratic governance and society.”

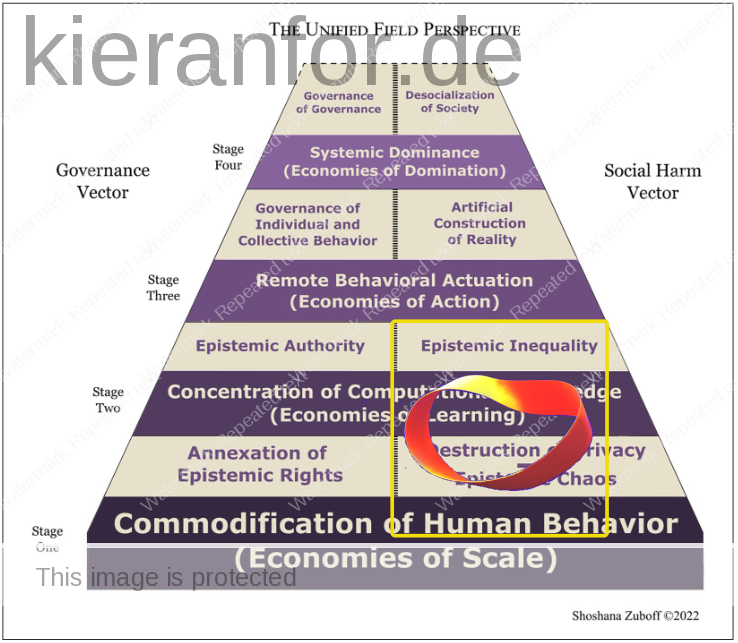

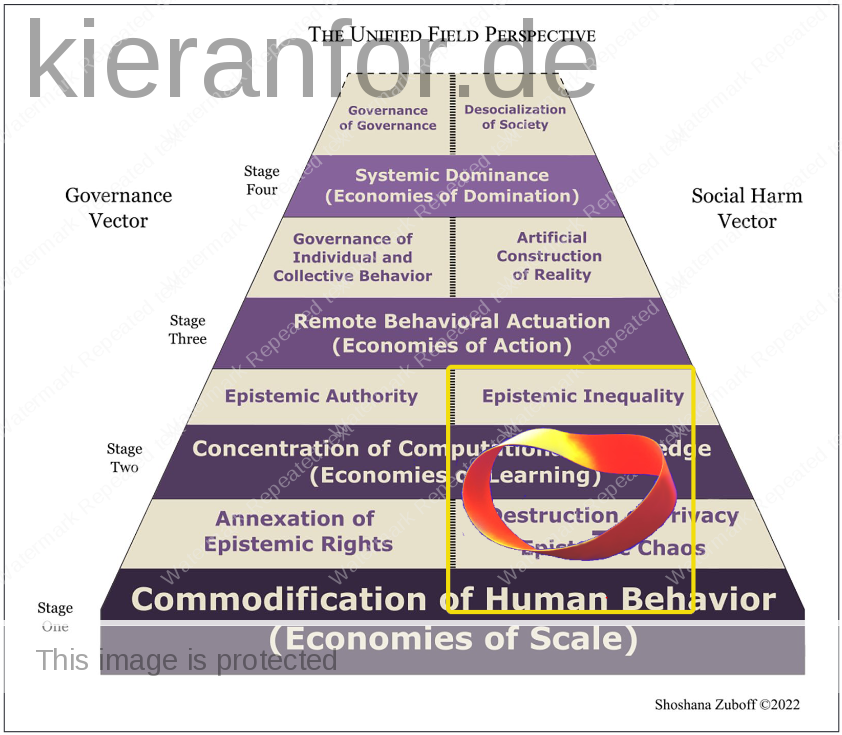

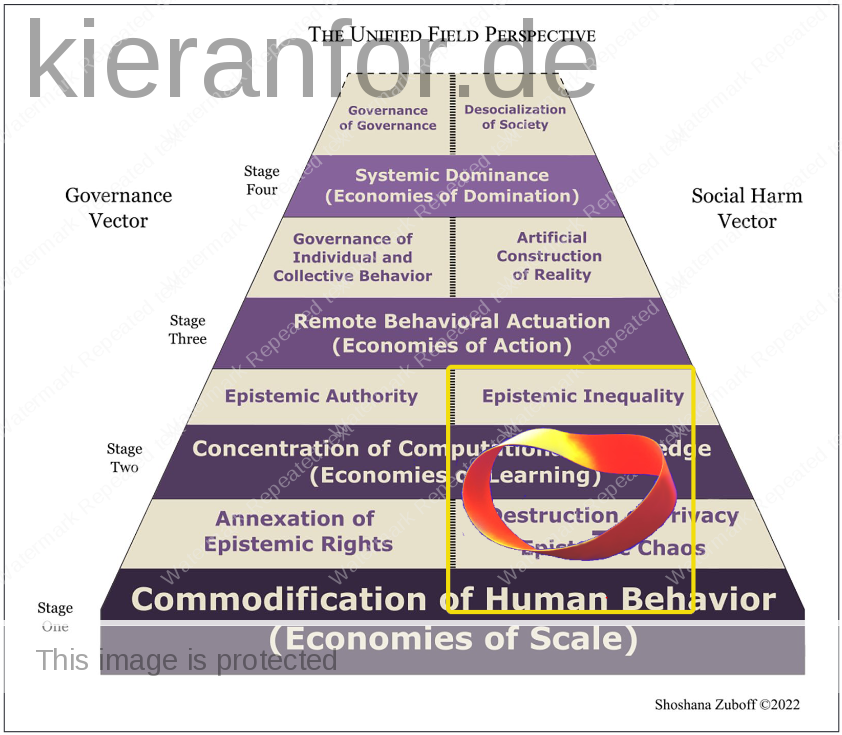

The Unified Field Perspective

- “social harms are siloed and treated as disparate crises.”

KF: The attraction of seeing these challenges as discrete issues might be understood as a coping mechanism for the seemingly insurmountable task of seeking to understand the bigger picture. While a comprehensive exploration of the nebulous issue of privacy is itself a daunting task, it is at least one way to address the larger systems at play.

How do you eat an elephant? One bite at a time. It is easy, perhaps even inevitable, to give way to hopelessness and despair when one considers how completely enveloped we are by these technologies. In a way, the God-like omnipresence and omniscience of digital technology engender the same sense of awe and wonder as the divine, and the same impulse to submit to an unknown and unknowable power. Being so caught up inside a system leaves individuals feeling helpless. The democratic processes that might have stood in opposition to these powers are crumbling before our eyes as wars, economic turmoil, climate crises, and a host of other disasters unfold in real time.

The awesome power of the Gorgons has been harnessed by individual mortals to transfix and petrify the old order: destroying/disrupting institutions while “empowering the individual” (Perseus); dividing to conquer. [Medusa was the only one of the three Gorgons who was mortal.]

- “The unified field perspective offers a solution for this fractured tower of Babel”

- “The four stages of surveillance capitalism’s institutional development are each identified by their novel economic operations. These are: The Commodification of Human Behavior; The Concentration of Computational Knowledge Production and Consumption; Remote Behavioral Actuation; and Systemic Dominance.”

- “We are taking what has been with the institution,” Cook says, “and empowering the individual”

- “regulatory disruption”

- “When Cook describes “empowering” the individual, he declares Apple’s prerogative to supplant existing institutions and laws. Apple Inc. asserts itself as the source of authority that “empowers” and therefore its equivalent authority to disempower individuals just as quickly with a flick of its terms of service or operating system”

KF: Shamima Begum

- “Cook’s false flag of liberation alienates the individual from society to obscure the inconvenient-to-Apple truth that only democratic society can authorize and protect the rights and laws that sustainably empower and protect individuals.”

- “Clay Christensen, originator of disruption theory”

- “codified principles of information integrity, truth telling, and factualization that a decade later would not be regarded as fusty nostalgia, but rather as oases of rationality in a corrupted information hellscape”

- “J. Anderson & Rainie, 2020”

- “They envisioned new privately mediated relationships with “individuals” that first bypass institutions, then eliminate the functions that institutions were designed to protect, and ultimately render institutions irrelevant.”

- “fake education”

KF: Zuboff lays out how education can fall victim to the same processes that undermined the institution of journalism, paving the way for “fake education” just as the erosion of journalism paved the way for “fake news” (p. 9). The holy grail of personalized learning, billed as “empowering the individual,” is merely “the eventual substitution of private computational governance for democratic governance,” or, as Watters put it, “…industrialization…”

- “the eventual substitution of private computational governance [RTBF] for democratic governance.”

- “[new social harms] calculated as the cost of institutional reproduction and treated as externalities.”

- “disorient, distract and fragment the democratic order.”

Foundational Stage One: The Commodification of Human Behavior (Economies of Scale)

The Economic Operations

- “the secret massive-scale extraction of human-generated data.”

- “these discarded behavioral traces were a surplus—more than what was required for product improvement.”

- “It launched the online targeted ad industry, best understood as surveillance advertising—”

- “If we did have a category,” he ruminated, “it would be personal information … Everything you’ve ever heard or seen or experienced will become searchable. Your whole life will be searchable”

KF: “To remember everything is a form of madness” – Searchable by whom?

- “a loss leader for massive-scale extraction of “your whole life.””

- “Google’s inventions depended on the secret invasion of once-private human experience to implement a hidden taking without asking.”

KF: Hidden behind the service is the fact that the product is you. We give you what you need so that we can take from you what we need: win-win. As you become dependent on us, you need us all the more, and we can take ever more of what we please.

- “construct user profile information with methods that knowingly bypassed users’ agency, awareness, and intentions.”

- “UPI for a user … can be determined even when no explicit information is given to the system”

- “Anything that might “stir the privacy pot and endanger our ability to gather data” was scrupulously avoided.”

- “This surveillance dividend” $$$

- “The “click-through rate” was only the first globally successful prediction product, and online targeted advertising was the first thriving market in human futures. Surveillance capitalism was thus “declared” into being and the only witnesses to its birth were sworn to secrecy.”

- “One may take refuge in the fiction of choice with respect to a discrete decision to share specific information with a corporation, but that information is insignificant compared to the volume of behavioral surplus that is secretly captured, aggregated, and inferred.”

- “to the most intimate and predictive data residing in the two critical sectors of ⭐education ⭐ and healthcare.”

“Every product called “smart” and every service called “personalized” are now loss leaders for the human data that flow through them.”

- “Once downloaded, and no matter how apparently benign, [Apps] function as data mules shuttling behavioral signals from “smart” devices to servers primarily owned by the tech giants and the ad tech data aggregators”

Supply of Human-Generated Data

- “the “stunning pattern” of a legal environment “specifically shaped to accommodate” the internet companies”

- “The politics of knowledge defined the core of Hayek’s thesis. It was the intrinsic “ineffability” of “the market,” he argued, that necessitates maximum freedom of action for market actors, an idea that shaped the libertarian creed and its insistence on absolute individual freedom”

- “radical market freedom is justified as the one source of truth and the origin of political freedom”

- “As early as 1951 he worried that democratic “collectivism” would undermine “political democracy.”

- “Friedman saw the field of combat clearly: the market must dominate, democratic institutions must recede.”

- “By the late 1970s and for decades to follow, politicians and policy makers were expected to internalize contempt for their own power as they learned how to design, play, and defend a game in which the only rule was the absence of rules.”

- “Hong Kong … persuaded me that while economic freedom is a necessary condition for civil and political freedom, political freedom, desirable though it may be, is not a necessary condition for economic and civil freedom.”

- “Mr. Friedman … never held elected office but he has had more influence on economic policy as it is practiced around the world today than any other modern figure.”

“With Friedman, “heresies had become the orthodoxy.” Friedman’s greatest skill was to imbue his radical ideas with the aura of inevitability”

KF: How to do this without saying it? / TINA V [“Total Information Awareness.”]

- “Former Google CEO Eric Schmidt celebrated this triumph on the first page of his 2014 book on the digital age when he described “an online world that is not truly bound by terrestrial laws … the world’s largest ungoverned space.””

- “an extra-societal zone in which the norms and laws of real-world democracies do not apply.”

- “It denigrates to the point of caricature the US government’s role in the governance of digital information and communication spaces, insisting on minimal government involvement or intervention”

- “industry self-regulation of privacy was not working for American consumers”

Demand for Human-Generated Data

- “With the attacks of September 11, 2001, everything changed. The new focus was overwhelmingly on security rather than privacy”

- “Total Information Awareness.”

- “If the intelligence community was to indulge its obsession to ascertain the future, then it would have to “rely on private enterprise to collect and generate information for it””

- “surveillance exceptionalism”

- Because action is weaker than institutions, Big Tech was compelled to surpass all prior lobbying records, including those of Big Oil and Big Tobacco.

- “Edward Snowden”

“all of it to collect the dots in the hope of one day connecting them”

- “US government practices of purchasing extraconstitutional data, such as location tracking, has been identified as a form of “data laundering” designed to evade Fourth Amendment protections”

- “This choice forfeited the crucial first decade of the digital century as an opportunity to advance a distinctly democratic digital future, while secrecy deprived the public of the right to debate and combat.”

The Governance Vector: The Annexation of Epistemic Rights

- “privacy as it was understood as recently as the year 2000 has been extinguished.”

“Elemental rights to private knowledge of one’s own experience, which I shall refer to as “self/knowledge,” were expropriated en masse,”

- “With the term “elemental,” I mean to mark a distinction between tacit rights and juridical rights”

- “For example, the ability to speak is an elemental right born of a human condition.”

- “Privacy involves the choice of the individual to disclose or to reveal what he believes, what he thinks, what he possesses”

- “The epistemic rights embedded in the “freedom to select” are thus accumulated as corporate rights.”

- “Though one does not grant Amazon knowledge of one’s fear, the corporation secretly takes it anyway,”

- “A new age has dawned in which individuals’ inalienable decision rights to self/knowledge must be codified in law if they are to exist at all.” (Obedience to the Unenforceable)

The Social Harm Vector (1): The Destruction of Privacy

“Most striking is that the destruction of privacy and all that follows from it has been perpetrated for the sake of the banality that is commercial advertising.”

- “In result, the battered but not broken privacy standard of the year 2000 now appears as the final hour of a long age of innocence.”

- “damage control after secret massive-scale extraction has been institutionalized”

KF: Zuboff knows that contemporary discussion of privacy is merely “damage control after secret massive-scale extraction has been institutionalized.” Everyone alive today is compromised. One might also speculate that future generations are compromised too, since data on the living can be extrapolated to create potential profiles of the unborn.

What is needed is a firebreak: a space that the commodification of human experience cannot cross. We can create one by protecting the one space that is universally applicable – education [economic exclusion zone].

The Social Harm Vector (2): The Rise of Epistemic Chaos

- “In an information civilization, systemic threats to information integrity are systemic threats to society and to life itself.”

- “The early conflation of internet commerce and freedom of expression enabled the giants to defend their practices with a twisted notion of “free speech fundamentalism” that equated any discussion of stewardship with censorship and a denial of speech rights”

- “The engineering solution to “the engineering problem” was this”

- “blindness by design.”

- “information must not be confused with meaning.”

- “The result is automated human-to-human communications systems that are blind by design to all questions of meaning and truth.”

- “Every fundamental advance in symbolic media–the alphabet, mathematic notation, printed text–produced social and psychological upheaval. These conditions eventually required societies to bridge the gap between symbol and “reality” with explicit standards of information integrity and new institutions to govern those standards.”

- “The “death of distance,” once celebrated, imposes a new quality of distance expressed in the untraversable fissure between the sentient subject and “reality,” “truth,” “fact,” “meaning,” and related measures of information integrity. Fissure becomes chasm, as blind-by-design systems saturate society—systems that are not only antisocial but aggressively, eerily, asocial.”

- “Shannon declared the irrelevance of meaning to the engineering problem. Surveillance capitalism declares the irrelevance of meaning to the economic problem.”

KF: Electric telegraph; “Internalization of rules for commercial entities…” (Fast Company);

- “I have called this institutionalized relationship to human-generated data “radical indifference.””

- “to extract the predictive signals that reduce others’ uncertainty about what you will do next and thus contribute to others’ profit.”

EEPR: “engagement > extraction > prediction > revenue.”

- “Recent research demonstrates that Facebook amplifies misinformation because it drives EEPR. Median engagement with misinformation is higher than engagement with reliable information regardless of its political orientation. But because the bulk of misinformation is produced by publishers on the far right, its superior economic value means that right-wing misinformation is consistently privileged with every EEPR optimizing algorithmic amenity”

- “When social information is transmitted as a bulk commodity through blind systems optimized for volume and velocity, the result is epistemic chaos characterized by widespread uncontrollable information corruption.”

KF: CJD ⬆

- “They anticipate that increased democratic governance will trigger a doomsday scenario in which Facebook is categorically unable to comply at the required scale.”

- “The engineers compare their data flows to a bottle of ink poured into a lake. “How do you put that ink back in the bottle? How do you organize it again, such that it only flows to the allowed places in the lake?”” Machine unlearning

- “the engineers say that the company simply cannot comply. Worse still, the company cannot admit that it cannot”

“blindness by design is incompatible with any reasonable construction of responsible stewardship of global social communications.”

- “Their statement is an admission that information civilization has thus far been built on a novel genus of social information that blindly blends fact and fiction, truth and lies in the service of private economic imperatives that repurpose social information as an undifferentiated bulk commodity.”

- “This is a social experiment without historical precedent nihilistically imposed upon “subjects” who can neither consent nor escape because the experimenters and their manipulations are both hidden and ubiquitous”

- “The further downstream one goes, the more critical it becomes to recognize that the solutions are upstream, where the causes of harm originate.”

- “Genuine solutions will depend upon abolition and reinvention, not post-factum regulation.”

KF: moratorium, EEZ (to be widened), and protection. [Downstream ????]

Stage Two: The Concentration of Computational Knowledge Production and Consumption (Economies of Learning)

The Economic Operations

Grabbed all the data; poached all the researchers (“With so few teaching faculty, colleges and universities have had to ration computer science enrollments, which has significantly disrupted the knowledge transfer between generations”); “Secrecy, rather than patenting, remains the preferred strategy to protect their research findings”

The Governance Vector: Epistemic Authority

- The privatization of data about people and society in stage one founds the privatization of the means of knowledge production and consumption in stage two.

- concentration of data ➡ concentration of knowledge

What may be known? Who knows? Who decides who knows? In taking command of the answers, the giants exercise the epistemic authority to command the division of learning in society.

KF: Foucault?; church [religious] authority on knowledge/truth/salvation

The economic and governance gains of the surveillance capitalist order depend upon freezing every democratic impulse toward contradiction.

The Social Harm Vector: Epistemic Inequality

Information oligopoly shades into information oligarchy as it produces a wholly new axis of social inequality, expressed in the growing gap between the many and the few now defined by the difference between what I can know and what can be known about me.

- oligopoly: a state of limited competition, in which a market is shared by a small number of producers or sellers.

- oligarchy: a small group of people having control of a country, organization, or institution.

The individual and social sacrifices of stage one are not redeemed with service to the greater good in stage two

Stage Three: Remote Behavioral Actuation (Economies of Action)

In stage two, those unprecedented flows of trillions of data points each day converge with unprecedented computational capabilities to produce unprecedented concentrations of illegitimate though not illegal knowledge: epistemic inequality.

In stage three, the capabilities enabled by these conditions come to fruition in the transformation of illegitimate knowledge into illegitimate power.

- Cambridge Analytica; Brad Parscale, Trump’s digital director, had gambled his modest budget entirely on Facebook, where corporate staff members embedded within the campaign helped the Trump team dominate surveillance capitalism’s key operational mechanisms, referred to within Facebook as “the tools”

- The campaign identified three groups least likely to support Trump–idealistic white liberals, young women, and African Americans—and labeled these as “audiences” for “deterrence.” “The tools” were deployed to persuade these citizens, especially Black citizens, not to vote.

- convince Black citizens that the most effective expression of Black protest was simply not to vote.

- “Microtargeting” is the euphemism for the range of digital cueing mechanisms engineered to tune, herd and condition individual and collective behavior in ways that advance commercial or political objectives.

- Illegitimate knowledge is thus transformed into illegitimate power. Radical indifference means that even in a democracy, microtargeting and manipulation to deter voting is equivalent to microtargeting and manipulation to sell a new jacket.

The work here was accomplished by a specific form of epistemic power that I have called instrumentarian power. It is covertly wrung from massive asymmetries of knowledge, harnessed to the diminishment of human agency through the friction-free conquest of human action, and mediated by the Big Other of connected, pervasive, blind-by-design architectures of digital instrumentation

- Instrumentarian power is an affordance of surveillance capitalism, available to own or rent. It works its will invisibly. No violence. No blood. No bodies. No combat.

KF: Digital Mercenaries; bloodless coups; [Cyber-mercenaries are the rage; most of their data is scraped from the giants (Bradshaw et al., 2021).]

The Economic Operations

- Facebook; Frances Haugen;

- The News Feed algorithm was a social communications system engineered to follow the math not the meaning of interactions, just as Shannon had prescribed for his machines.

- Behavioral effects spread widely and quickly as every individual user, publisher, organization, or troll farm found themselves chasing the proof of life that Facebook’s validating metrics provided.

- The Common Ground team disbanded, and many senior staff involved in the remediation efforts left the company. Employees were told that Facebook’s priorities had shifted “away from societal good to individual value”

The Governance Vector: The Governance of Collective and Individual Behavior

[Zuckerberg] strikes this key or that from his celestial perch, and qualities of human behavior and expression rise or fall. Anger is rewarded or ignored. News stories are more trustworthy or unhinged. Corrupt information is showcased or sidelined. Publishers prosper or wither. Political discourse turns uglier or more moderate. People live or people die.

- the hypothesis that the corporation is now engaged in a mega-massive-scale contagion experiment, this time trained on triggering social contagions of polarization.

- These interventions appear capable of evoking, selecting and reinforcing behavior in ways that disrupt human agency, alter behavioral trajectories, and abrogate “the right to the future tense” (Zuboff, 2019, pp. 329–348).

- the affordances of the surveillance capitalist order reconfigure information warfare (for commercial or political purpose) as a market project.

The fusion scenario is now vividly on display in a United States where state-level abortion bans turn law enforcement and judicial authorities toward data from the giants as a means of transforming pregnant women into prey

If today communities of color, activists, and now the vast category of pregnant women and their allies can become targets of information warfare, then tomorrow it can and will be any and all persons or groups. “First they came for … ”

- reimagine, reinvent and reclaim our information civilization for a democratic future nourished by data, information, and knowledge.

The Social Harm Vector: The Artificial Construction of Reality

When Zuckerberg decided that the destruction of privacy should be the new norm, and he “just went for it,” that was a profound violation of the norms that express commonsense knowledge. It was a form of terrorism from which the democratic order failed to protect its peoples.

Laurence Tribe tirelessly explains that First Amendment rights do not apply to Facebook’s discourse, because its social media spaces are not public spaces and do not operate under public law. They exist under the jurisdiction of private capital, not governmental authority. Tribe writes, “The First Amendment, like the entire Bill of Rights, addresses only government action, not the action of private property owners. That’s not a bug but a feature”

- a process of unnatural selection dictated by surveillance capitalism’s political-economic objectives

Stage Four: Systemic Dominance (Economies of Domination)

The Economic Operations

The giants’ control over critical digital infrastructure is leveraged as a means of weakening and then usurping the governance prerogatives of the democratic state.

e.g. COVID

- The UK’s National Health Service (NHS) announced a data-sharing agreement with Google, Microsoft, and the secretive data mining analytics firms Palantir and Faculty. Described as “the largest handover of NHS patient data to private corporations in history,” the NHS claimed the companies would provide a “single source of truth” with which to track the pandemic

- “Pan European Privacy Protecting Proximity Tracing” (PEPP-PT) vs. “Decentralized Privacy-Preserving Proximity Tracing” (DP-3T)

- The language choice of “decentralized” versus “centralized” was a public relations coup that produced an immediate media explosion in a Europe already on edge.

- There were technical hurdles. Without the cooperation of the two technology giants that control the critical digital infrastructure of Europe’s—and most of the world’s—smartphone operating systems, it would be impossible to implement either DP-3T or PEPP-PT effectively and at scale.

- The risks associated with trust were not eliminated but reassigned [“empowering the individual”]

- Apple & Google agree to invest in Decentralized Privacy-Preserving Proximity Tracing (DP-3T)

- In a bizarre twist, this move cast both corporations as privacy champions defending Europe from the most comprehensive privacy protections on Earth.

“Arguments against centralized systems have been overly exaggerated, and the ones in favor of decentralized systems have been oversold to a level that we found unethical … In the former case, trust is based on a democratic system. In the latter case, trust is based on a commercial system.”

The Governance Vector: The Governance of Governance

- “even in the face of so much death and disease these companies currently have the power to constrain the choices of democratically elected sovereign states. They are the gatekeepers of society.”

- “If we are not strong enough to apply our own laws in the real world to the internet, then what is the use of the state?”

The Social Harm Vector: The Desocialization of Society

In the development of systemic dominance, the taking is no longer confined to data, knowledge, or even the raw power of behavioral modification. Here the taking extends to the living bonds of trust.

Economies of domination obscure the central insight upon which the very idea of democracy stands: only society can guarantee individual rights

- [KF: Thatcher? – “Society is not, then, as has often been thought, a stranger to the moral world … It is, on the contrary, the necessary condition of its existence” (Durkheim, 1964, p. 399).]

╬╬╬╬╬╬╬╬╬╬╬╬

Conclusion: The Golden Sword

The abdication of these information and communication spaces to surveillance capitalism has become the meta-crisis of every republic because it obstructs solutions to all other crises.

[cf. slavery / child labor] the challenge again shifts from regulation to abolition as the only realistic path to reinvention.

- first freeze surveillance capitalism’s institutional development, then inhibit its reproduction and shift the global trajectory from dystopia to hope.

- the lawful abolition of secret massive-scale extraction is democracy’s Golden Sword that can interrupt the power source upon which all surveillance capitalism’s destructive economic operations, governance takeovers, and social harms depend.

- Abolition of these already illegitimate operations means no annexation of epistemic rights, no wholesale destruction of privacy, and no industrialized tons of behavioral signals flowing through the blind-by-design systems required to accommodate their scale and speed.

KF: Zuboff’s proposed solution is fantastic, in the literal sense: a thing of fantasy. I cannot imagine any condition under which the US tech giants might be compelled to cease their data collection. The barrage of reasons why this could not happen would likely be tipped with the “national security” angle: “We cannot stop collecting data unless other countries stop doing so too”. As such, we would need to engage in discussions akin to SALT, given that we currently seem to be on a trajectory of MAD. [It’s the same as the call for a moratorium on LLMs]

Zuboff’s Fourth Law: Information is only as useful to society as the institutions, rights, and laws that govern its production and use.

- It frees us to begin again in the spaces sold cheap by Clinton and Gore in 1997 and sold again in 2001.