Header image: KF in Dall-E

Commentary in relation to John Fletcher Moulton. (1924). Law and Manners. The Atlantic. ➡ https://www2.econ.iastate.edu/classes/econ362/hallam/NewspaperArticles/LawandManners.pdf

I first came across the term “Obedience to the Unenforceable” in an Al-Anon meeting a few years ago and its message has stayed with me since. Written 100 years ago, Moulton’s words seem like they might have been written (for) today. I’ll note a few highlights below and then add some commentary.

∞

Read the full text at the link above; a few highlights follow.

Between Positive Law and Free Choice lies the realm of Obedience to the Unenforceable [AKA “Duty, Public Spirit, Good Form, Manners”]. Moulton tells us:

- The degree of this sense of a lack of complete freedom in this domain varies in every case.

- It grades from a consciousness of a Duty nearly as strong as Positive Law, to a feeling that the matter is all but a question of personal choice.

- It is a land of freedom of action, but in which the individual should feel that he was not wholly free.

- The obedience is the obedience of a man to that which he cannot be forced to obey.

- He is the enforcer of the law upon himself

- It is doing that which you should do although you are not obliged to do it

- The real greatness of a nation, its true civilization, is measured by the extent of this land of Obedience to the Unenforceable.

- It measures the extent to which the nation trusts its citizens

- Between ‘can do’ and ‘may do’ ought to exist the whole realm which recognizes the sway of duty, fairness, sympathy, taste, and all the other things that make life beautiful and society possible.

∞

One might consider Obedience to the Unenforceable in relation to The Shopping Cart Theory or to people playing music through speakers on the bus, but I gave some thought to it in relation to the Right to Be Forgotten (RTBF) and Large Language Models (e.g. ChatGPT).

When you invent a new technology, you uncover a new class of responsibility, and it’s not always obvious what those responsibilities are

The Three Rules of Humane Tech

I went to a talk recently, given by a visiting scholar at our university. On the way into the room, there were coffee and cookies available for the attendees. Now, there was no sign stating that each attendee could only have one or two cookies. It was simply expected that nobody was going to come along, take the whole plate, and tip all the cookies into their bag. I could have done just that and I’m not sure anyone would have said anything if I had. Indeed, I may not even have been noticed doing this but, even if I had, what rule was I breaking?

The RTBF is one example where regulation had to scramble to deal with the impact of the permanence of digital memory on people’s daily lives. The advent of LLMs presented a newer and greater challenge, because there was no explicit [enforceable?] rule or law in place to stop a company from scraping data from the whole internet and integrating it into a large language model. In the same way that nobody thought it necessary to put up a sign at the talk that said “one cookie per attendee”, nobody thought it necessary to regulate against the whole of the internet being swallowed. Regulation is often absent where Obedience to the Unenforceable is present, but also where it is beyond the ken of those responsible that such regulation is even necessary.

Now, if I had taken all the cookies, there may well have been a sign in place at the next event suggesting that people should take one/two cookies. While this would come after the fact, in the case of the chocolate-chip cookies, this is an adequate solution.

In the case of LLMs, this is not a solution; it seems that “machine UNlearning” is far trickier than “machine learning”; an analogue might be attempting to remove the presence of one particular chicken from a chicken nugget [see AI, AI, and chicken nuggets 🐔].

Once again, we are responding to the impact of technology that is introduced by way of a vast societal experiment (as is usually the case). Again, technology is determining the law, as each new tool highlights the need for its own regulation. However, during the period before such technologies are regulated, where Obedience to the Unenforceable is the guiding principle, society finds itself in an increasingly vulnerable situation given that these newer emerging technologies have the power to dramatically alter, or even end, our species in the very short term. The urgency of this issue is underscored when the industry in question is itself crying out for regulation, seemingly having realized that they have the tiger by the tail. [All this, before we even mention the environmental impact of AI systems.]

So, there it is. In Moulton’s words, “Between ‘can do’ and ‘may do’ ought to exist the whole realm which recognizes the sway of duty, fairness, sympathy, taste, and all the other things that make life beautiful and society possible.”

With commercial interests being blind to Obedience to the Unenforceable, and given the power and sophistication of these emerging technologies, is it only a matter of time before our species is annihilated for want of…manners?

∞

(June 28, 2024) Microsoft’s AI boss thinks it’s perfectly okay to steal content if it’s on the open web

https://www.theverge.com/2024/6/28/24188391/microsoft-ai-suleyman-social-contract-freeware

- When CNBC’s Andrew Ross Sorkin asked [Microsoft AI boss Mustafa Suleyman] whether “AI companies have effectively stolen the world’s IP,” he said:

I think that with respect to content that’s already on the open web, the social contract of that content since the ‘90s has been that it is fair use. Anyone can copy it, recreate with it, reproduce with it. That has been “freeware,” if you like, that’s been the understanding.

See also, Harris, T. & Raskin, A. (Hosts). (2023, March 24). The AI dilemma [Audio podcast episode]. In Your Undivided Attention. Center for Humane Technology. https://www.humanetech.com/podcast/the-ai-dilemma

(July 14, 2023) Framework for Legislating on Artificial Intelligence https://www.eff.org/files/2023/07/24/2023.07.14_ai_two_pager.pdf

- Passing a Law to Mandate Compensation for Machine Learning Would be Disastrous

- Congress must avoid creating new rights in copyright law to force compensation in the development of AI tools

- …if a compensation regime was mandated on the training sets, it would prevent low-cost AI tools from emerging and may even prevent well established firms from experimenting.

∞

(May 29, 2024) EU ChatGPT Taskforce: a road to GDPR enforcement on AI? https://www.techradar.com/computing/cyber-security/eu-chatgpt-taskforce-a-road-to-gdpr-enforcement-on-ai

- The group analyzed several problematic aspects of the popular AI chatbot, especially around the legality of its web scraping practices and data accuracy.

- GDPR requires a legal basis for processing personal data—in this case, either asking for the individual’s consent or having a “legitimate interest” in doing so. OpenAI cannot ask for consent to scrape your information online. That’s why, after the Italian case, the company is largely playing the latter card.

- The report ultimately stresses the importance of EU citizens’ rights under GDPR, like the right to delete or rectify your data (known as the “right to be forgotten”) or the right to obtain information on how your data is being processed.

∞

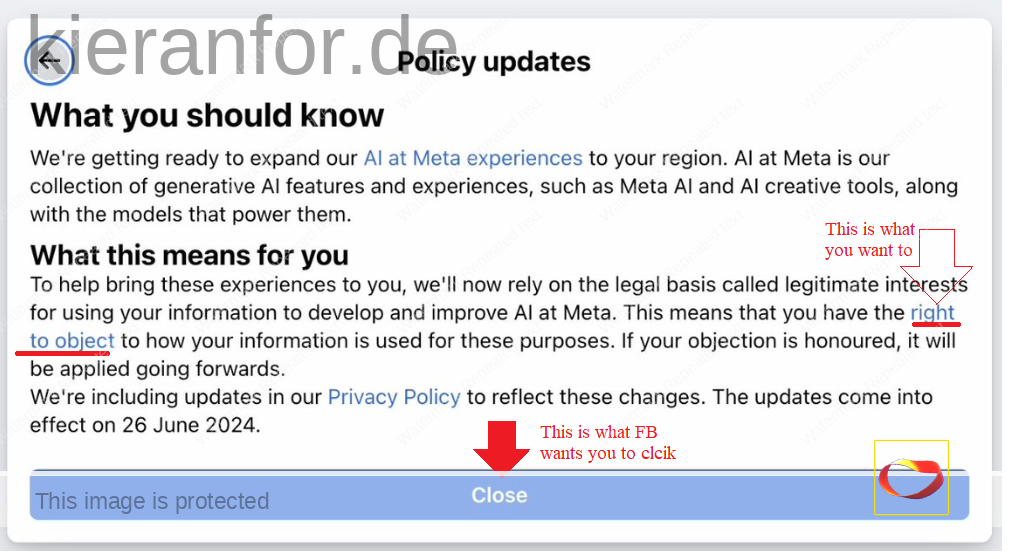

(May 26, 2024) Meta’s [shitty] new notification informing us they want to use the content we post to train their AI models.

https://threadreaderapp.com/thread/1794863603964891567.html

∞

(Feb 20, 2024) Generative AI’s environmental costs are soaring — and mostly secret

https://www.nature.com/articles/d41586-024-00478-x

- Last month, OpenAI chief executive Sam Altman finally admitted what researchers have been saying for years — that the artificial intelligence (AI) industry is heading for an energy crisis. It’s an unusual admission.

- At the World Economic Forum’s annual meeting in Davos, Switzerland, Altman warned that the next wave of generative AI systems will consume vastly more power than expected, and that energy systems will struggle to cope. “There’s no way to get there without a breakthrough,” he said.

For another time…

It is this confusion between ‘can do’ and ‘may do’ which makes me fear at times lest in the future the worst tyranny will be found in democracies.

John Fletcher Moulton. (1924). Law and Manners. The Atlantic. https://www2.econ.iastate.edu/classes/econ362/hallam/NewspaperArticles/LawandManners.pdf

Archive: https://archive.is/Rhoil