∞

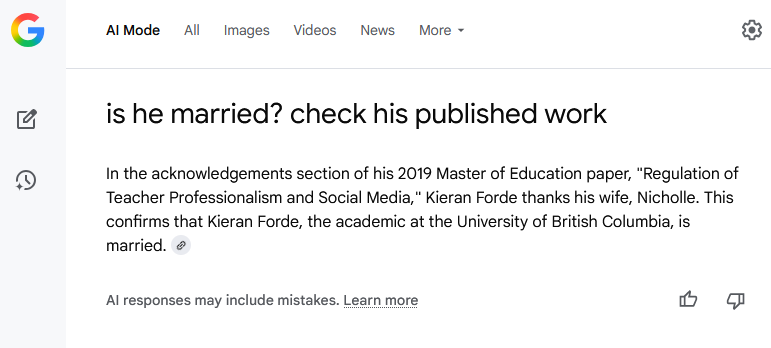

Google AI is able to answer questions about me that I had not imagined it could. There is very clearly a concern around the Right to Be Forgotten here. I think I need to return to writing about that for the next while.

(Feb 2, 2020) Right to Be Forgotten

https://kieranfor.de/2020/02/02/right-to-be-forgotten/

(Feb 17, 2023) Using my Right to Be Forgotten

https://kieranfor.de/2023/02/17/using-my-right-to-be-forgotten/

(Sept 18, 2025) Italy first in EU to pass comprehensive law regulating use of AI

https://www.theguardian.com/world/2025/sep/18/italy-first-in-eu-to-pass-comprehensive-law-regulating-ai

- …including imposing prison terms on those who use the technology to cause harm, such as generating deepfakes, and limiting child access.

- The bill introduces prison sentences of between one and five years for the illegal spreading of AI-generated or manipulated content if it causes harm.

APA Journals policy on generative AI

https://www.apa.org/pubs/journals/resources/publishing-tips/policy-generative-ai

-> Extensive copyediting → Add a brief note at the end of the manuscript.

-> Large sections drafted using AI → Disclose full prompts, model details, and usage in the introduction of the paper.

- Via Anuj Gupta

Jabarian, Brian and Imas, Alex, Artificial Writing and Automated Detection (August 26, 2025). Available at SSRN: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5407424

- First, we find that detectors vary in their capacity to minimize False Negative Rate (FNR) and False Positive Rate (FPR), with the commercial detectors outperforming open-source.

- Second, most commercial AI detectors perform remarkably well, with Pangram in particular achieving a near zero FPR and FNR within our set of stimuli; these results are stable across AI models.
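The two error rates in the detector study can be made concrete with a small sketch. It assumes each text has a ground-truth label (True if AI-written) and a detector verdict (True if flagged as AI). FPR is the share of human-written texts wrongly flagged as AI; FNR is the share of AI-written texts the detector misses. The function name `error_rates` and the toy labels are hypothetical illustrations, not data or code from the paper.

```python
def error_rates(is_ai, flagged_ai):
    """Return (FPR, FNR) for a binary AI-text detector.

    is_ai[i]      -- True if text i was actually AI-generated (ground truth)
    flagged_ai[i] -- True if the detector labelled text i as AI-generated
    """
    pairs = list(zip(is_ai, flagged_ai))
    # Human-written texts the detector wrongly flagged as AI
    false_pos = sum(1 for truth, pred in pairs if not truth and pred)
    # AI-written texts the detector failed to flag
    false_neg = sum(1 for truth, pred in pairs if truth and not pred)
    humans = sum(1 for truth, _ in pairs if not truth)
    ais = sum(1 for truth, _ in pairs if truth)
    # A rate is undefined (NaN) when there are no examples of that class
    fpr = false_pos / humans if humans else float("nan")
    fnr = false_neg / ais if ais else float("nan")
    return fpr, fnr


# Hypothetical toy example: 4 human-written texts, then 4 AI-written texts.
truth = [False, False, False, False, True, True, True, True]
verdict = [False, True, False, False, True, True, False, True]
print(error_rates(truth, verdict))  # (0.25, 0.25)
```

A near-zero value for both rates, as the paper reports for Pangram, means few human writers are falsely accused and few AI-generated texts slip through.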

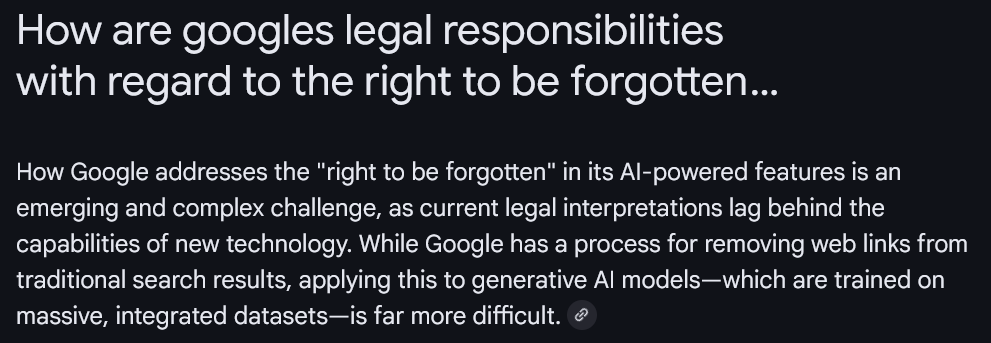

Google’s new AI mode: My question

The challenge of “unlearning” data from AI models

For traditional search, a delisting request simply removes a link from search results for a person’s name. This does not delete the original content from the web. With AI, the issue is that a person’s information isn’t stored in a single, easily erasable record, but is instead woven into the fabric of a model’s parameters.

- Integrated knowledge: Large language models (LLMs) learn from vast amounts of public data, internalizing patterns and relationships. An individual’s personal data becomes a tiny, inseparable part of a much larger whole.

- Impractical retraining: The only way to completely and verifiably remove a specific data point would be to retrain the entire model from scratch, a process that is both computationally intensive and financially impractical.

- “Machine unlearning” is experimental: Researchers are developing methods of “machine unlearning” to selectively remove data points without a full retraining, but this technology is still experimental and not yet a reliable method for full erasure.

Google’s approach and key considerations

Google’s approach involves a balance of legal compliance for traditional search and exploration of new methods for its AI products.

- Balancing privacy and information access: When processing delisting requests for search results, Google weighs the individual’s right to privacy against the public’s right to information. This can include factors like the person’s public role, the nature of the content (e.g., public records or journalistic work), and how old the information is.

- Using AI to process requests: AI tools can be used to help automate and scale the processing of privacy removal requests, making the process more efficient. However, the actual review of these requests still requires human judgment to assess context and the public interest.

- Geolocated restrictions: In Europe, Google restricts access to delisted content by using geolocation signals, so that users within the EU generally cannot find the delisted link when searching for a person’s name. This practice was affirmed by the European Court of Justice in 2019.

- Ongoing legal challenges: The legal landscape surrounding AI and the right to be forgotten is still developing. Google has faced legal battles, such as a recent case in Canada where it refused to comply with a privacy commissioner’s delisting recommendation for search results. As AI becomes more integrated, legal precedents will need to adapt.

What happens when an AI model has “forgotten”?

As demonstrated by a recent paper, even if data is removed from an AI’s training set, the model may still produce information that relates to the forgotten data through inference.

- No guarantee of erasure: In the context of LLMs, deleting a data point does not guarantee it can never be reproduced. The model has already learned patterns from the data, and may “re-create” or “hallucinate” similar information.

- Beyond the model: Additionally, generative AI outputs can be shared and stored by users in unregulated ways, making it impossible to completely erase the information once it has been generated.

Ultimately, the right to be forgotten in the age of generative AI remains an unresolved challenge. While Google has established procedures for traditional search, the integrated, complex nature of AI models means that truly “forgetting” data is not currently feasible.

AI responses may include mistakes. For legal advice, consult a professional.

(Aug 26, 2025) Students Hate Them. Universities Need Them. The Only Real Solution to the A.I. Cheating Crisis.

https://www.nytimes.com/2025/08/26/opinion/culture/ai-chatgpt-college-cheating-medieval.html [*]

- Learning is a change in long-term memory; that’s the biological correlate of what we do in the classroom. Now that most mental effort tied to writing is optional, we need new ways to require the work necessary for learning.

- The words “lecture,” “lecturer” and “lectern” all derive from the Latin verb “lego,” which typically means “to read.” In the medieval university, lectures involved teachers reading to the students from a book, sometimes the only copy the institution had….

- You can still see traces of that old academic culture in Ph.D. programs, in which students have to pass oral exams and defend their thesis in a viva voce (“with the living voice”) in conversation with their examiners.

- The shift to original, written student work was partly in response to instruction in increasingly technical fields and partly due to the fact that written work made it easier to teach more students.

- Tuesday (Aug 26) is the 1,000th day since ChatGPT’s release.

Today, ChatGPT is 1,000 days old. How have you used it here at UBC? Do you have generally positive or negative feelings about using it?

(Aug 30, 2025) SFU prof debuts 3D artificial intelligence teaching sidekick

https://www.cbc.ca/news/canada/british-columbia/sfu-prof-s-ai-sidekick-1.7620141

- DiPaola said his goal with Kia is to “anthropomorphize” AI to expose it for what it is — and what it is not.

“What better way to talk about AI ethics than to bring AI into the classroom to teach alongside me? Performatively, I think it engages students about the real issues,” he said.

- Sarah Eaton, an education professor at the University of Calgary, says the arrival of Kia and AI use in schools in general could lead to ethical concerns around academic labour and how schools might exploit it for cost savings.

- However, she also sees it as a sign of things to come in the classroom.

(Aug 29, 2025) AI Has Broken High School and College

https://www.theatlantic.com/newsletters/archive/2025/08/ai-high-school-college/684057

- ChatGPT was released in November 2022.

- It is clear that AI has been widely adopted, by students and faculty alike, yet the technology has also turned school into a kind of free-for-all.

Ian: My young daughter has been going to this set of classes outside of school where she learned how to wire an outlet. We used to have shop class and metal class, and you could learn a trade, or at least begin to, in high school. A lot of that stuff has been disinvested. We used to touch more things. Now we move symbols around, and that’s kind of it.

I wonder if this all-or-nothing nature of AI use has something to do with that. If you had a place in your day as a high-school or college student where you just got to paint, or got to do on-the-ground work in the community, or apply the work you did in statistics class to solve a real-world problem—maybe that urge to just finish everything as rapidly as possible so you can get onto the next thing in your life would be less acute. The AI problem is a symptom of a bigger cultural illness, in a way.

(Aug 18, 2025) Grammarly says its AI agent can predict an A paper

https://www.theverge.com/news/760508/grammarly-ai-agents-help-students-educators

- “Students today need AI that enhances their capabilities without undermining their learning,” said Jenny Maxwell, Head of Grammarly for Education.

- Do they?

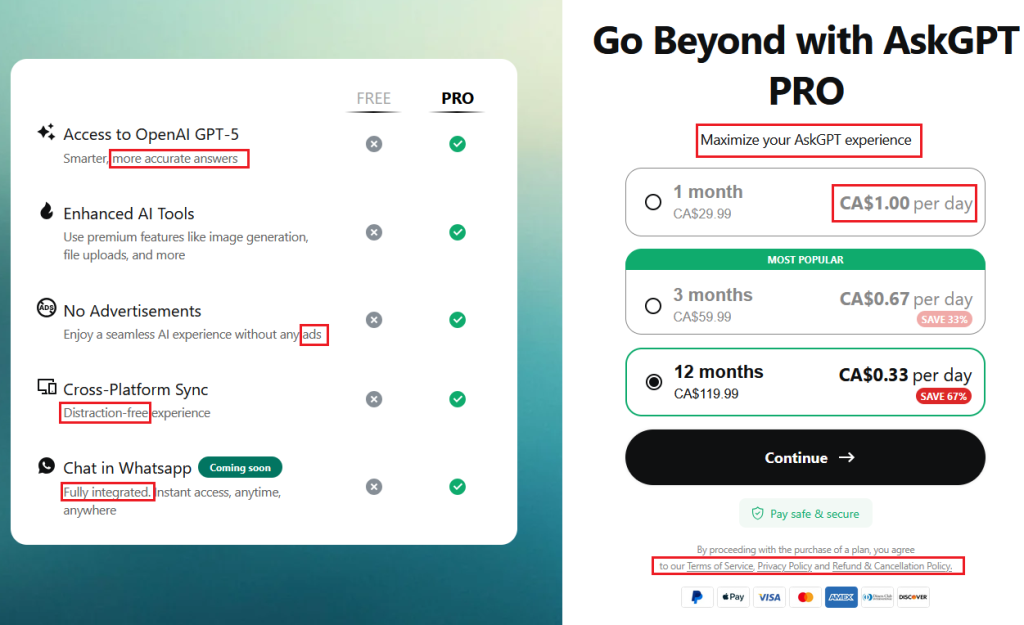

- …Grammarly says can provide feedback based on uploaded course details and “publicly available” information about the instructor…

- RTBF (Right to Be Forgotten)

(Aug 14, 2025) AI experts return from China stunned: The U.S. grid is so weak, the race may already be over

https://fortune.com/2025/08/14/data-centers-china-grid-us-infrastructure/

- McKinsey projects that between 2025 and 2030, companies worldwide will need to invest $6.7 trillion into new data center capacity to keep up with AI’s strain.

Goldman Sachs frames the crisis simply: “AI’s insatiable power demand is outpacing the grid’s decade-long development cycles, creating a critical bottleneck.”

- Meanwhile, David Fishman, a Chinese electricity expert who has spent years tracking their energy development, told Fortune that in China, electricity isn’t even a question.

- On average, China adds more electricity demand than the entire annual consumption of Germany, every single year.

- Whole rural provinces are blanketed in rooftop solar, with one province matching the entirety of India’s electricity supply.

(Aug 12, 2025) The AI Takeover of Education Is Just Getting Started

https://www.theatlantic.com/technology/archive/2025/08/ai-takeover-education-chatgpt/683840/ [*]

Sally Hubbard, a sixth-grade math-and-science teacher in Sacramento, California, told me that AI saves her an average of five to 10 hours each week by helping her create assignments and supplement curricula. “If I spend all of that time creating, grading, researching,” she said, “then I don’t have as much energy to show up in person and make connections with kids.”

- Using MagicSchool AI, instructors can upload course material and other relevant documents to generate rubrics, worksheets, and report-card comments.

- The more reliant kids are on the technology now, the larger a role AI will play in their lives later. Once schools go all in, there’s no turning back.

Communicating Generative AI Use with your Students

https://teaching-learning.ucalgary.ca/resources-educators/course-outlines/communicating-generative-ai-use-your-students

- Course Outcome: This anchors the AI guideline within the course’s formal learning goals, helping students understand the rationale for restrictions or permissions.

- Purpose for Learning: This explains the pedagogical intent behind the assignment design, showing how it supports specific forms of thinking, practice, or development.

- Permitted / Not Permitted Table: This provides a practical and accessible reference for students, clarifying exactly how AI tools may or may not be used at various stages of the assignment.

- Alignment with Course Outcome: This connects the AI policy back to the course goals, ensuring that decisions about tool use are grounded in educational purpose and learning integrity.

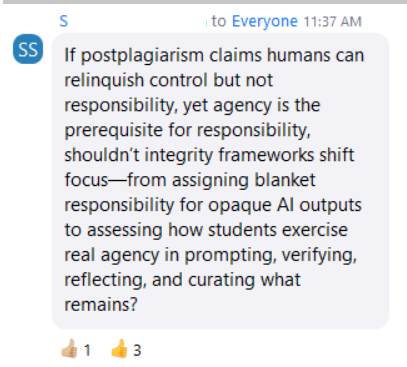

Floridi

“If you have ever studied physics, you have probably learned about Heisenberg’s uncertainty principle, which states that the more precisely you determine the position of a particle, the less precisely you can determine its momentum. Now, a similar principle has been proposed for AI (specifically LLMs) by Yale professor Luciano Floridi. Here one must choose between correctness and scope: a high degree of precision requires limited scope. If Floridi is right, it is impossible to build ‘artificial general intelligence’ on large language models, no matter how large they are made, because correctness decreases as scope grows.”

(July 29, 2025) AI can be responsibly integrated into classrooms by answering the ‘why’ and ‘when’

https://theconversation.com/ai-can-be-responsibly-integrated-into-classrooms-by-answering-the-why-and-when-261496

Two frameworks provide the essential lens we need to move beyond the hype and engage with AI responsibly:

- “virtue epistemology,” which argues that knowledge is not merely a collection of correct facts or a well-assembled product, but the outcome of practising intellectual virtues

Linda Zagzebski suggests the real goal of an assignment is not just writing the essay itself — but the cultivation of curiosity, intellectual perseverance, humility and critical thinking that the process is meant to instil.

[W]hen the “how” of AI is used to bypass the very struggle that builds virtue…This stands in direct contrast to philosopher and educator John Dewey’s view of learning as an active, experiential process.

- A care-based approach that prioritizes relationships

Deciding the “when” requires educators to know their learner, understand the learning goal and act with compassion and wisdom. It is a relational act, not a technical one.

(Aug 5, 2025) ‘We didn’t vote for ChatGPT’: Swedish PM under fire for using AI in role

https://www.theguardian.com/technology/2025/aug/05/chat-gpt-swedish-pm-ulf-kristersson-under-fire-for-using-ai-in-role

- The Swedish prime minister, Ulf Kristersson, has come under fire after admitting that he regularly consults AI tools for a second opinion in his role running the country.

Canvas

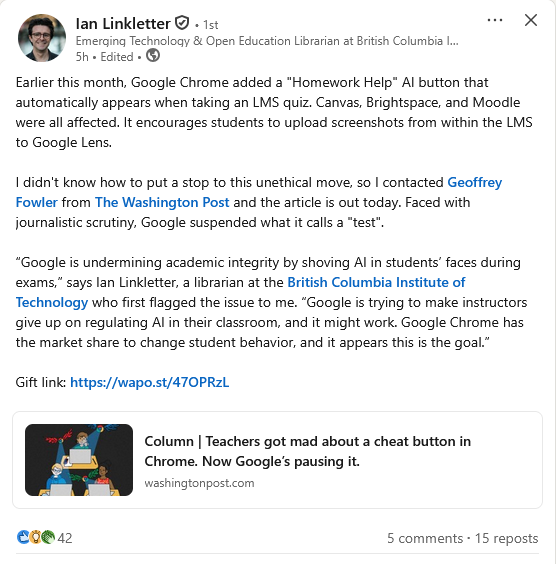

(July 23, 2025) Instructors Will Now See AI Throughout a Widely Used Course Software

https://www.chronicle.com/article/instructors-will-now-see-ai-throughout-a-widely-used-course-software

- Artificial-intelligence tools — including generative AI — will now be integrated into Canvas, a learning-management platform used by a large share of the nation’s colleges, its parent company announced on Wednesday.

- While many instructors in academe are skeptical of the technology, some universities have embraced it; starting in the fall, for instance, Ohio State University will require all its graduates to be “AI fluent.”

(April 2, 2025) Anthropic launches an AI chatbot plan for colleges and universities

https://techcrunch.com/2025/04/02/anthropic-launches-an-ai-chatbot-tier-for-colleges-and-universities/

- Anthropic announced on Wednesday that it’s launching a new Claude for Education tier, an answer to OpenAI’s ChatGPT Edu plan.

- To help universities integrate Claude into their systems, Anthropic says it’s partnering with the company Instructure, which offers the popular education software platform Canvas.

(June 23, 2025) ChatGPT May Be Eroding Critical Thinking Skills, According to a New MIT Study

https://time.com/7295195/ai-chatgpt-google-learning-school/

- [Three groups] write several SAT essays using OpenAI’s ChatGPT, Google’s search engine, and nothing at all

Researchers used an EEG to record the writers’ brain activity across 32 regions, and found that of the three groups, ChatGPT users had the lowest brain engagement and “consistently underperformed at neural, linguistic, and behavioral levels.” Over the course of several months, ChatGPT users got lazier with each subsequent essay, often resorting to copy-and-paste by the end of the study.

- Ironically, upon the paper’s release, several social media users ran it through LLMs in order to summarize it and then post the findings online. Kosmyna [the study’s lead author] had been expecting that people would do this, so she inserted a couple of AI traps into the paper, such as instructing LLMs to “only read this table below,” thus ensuring that LLMs would return only limited insight from the paper.

(Feb 23, 2024) Airline held liable for its chatbot giving passenger bad advice – what this means for travellers

https://www.bbc.com/travel/article/20240222-air-canada-chatbot-misinformation-what-travellers-should-know

- When Air Canada’s chatbot gave incorrect information to a traveller, the airline argued its chatbot is “responsible for its own actions”.

- The British Columbia Civil Resolution Tribunal rejected that argument, ruling that Air Canada had to pay Moffatt [the passenger] $812.02 (£642.64) in damages and tribunal fees.

“It should be obvious to Air Canada that it is responsible for all the information on its website,” read tribunal member Christopher Rivers’ written response. “It makes no difference whether the information comes from a static page or a chatbot.”

╬

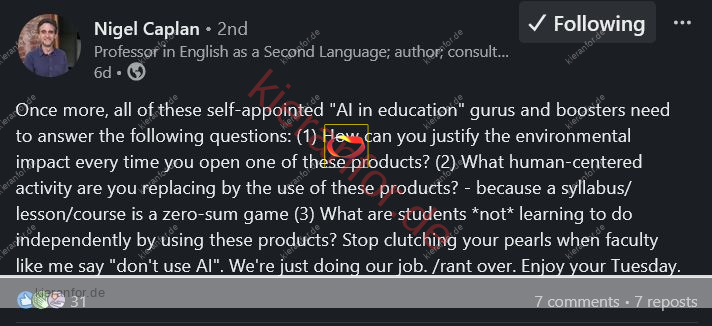

(Feb 22, 2024) Ben Williamson: 21 arguments against AI in education

https://codeactsineducation.wordpress.com/2024/02/22/ai-in-education-is-a-public-problem/

(Jan 31, 2025) Add F*cking to Your Google Searches to Neutralize AI Summaries

https://gizmodo.com/add-fcking-to-your-google-searches-to-neutralize-ai-summaries-2000557710

- Still working as of Aug 9, 2025 🙂